A C++ Data Stream Processing Parallel Library for Multicores and GPUs

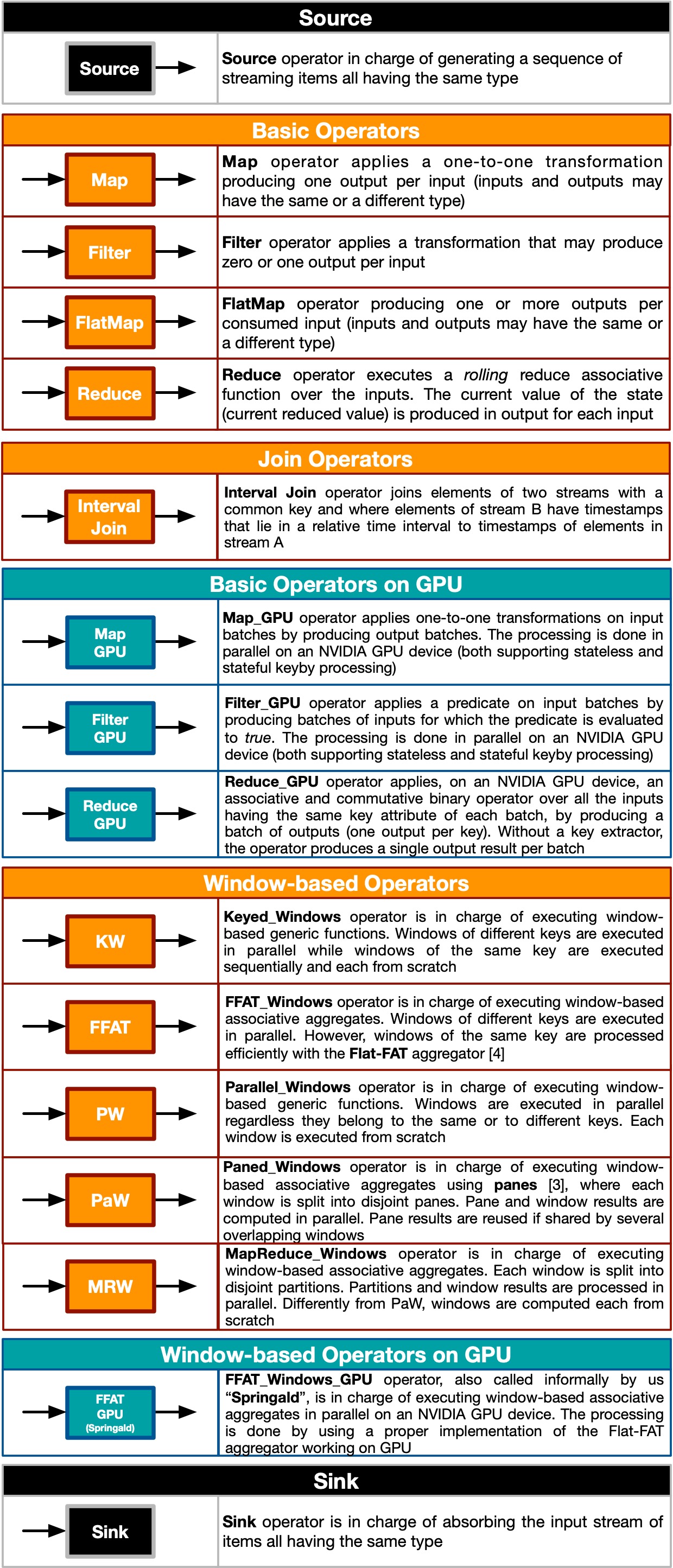

Operators

WindFlow provides a rich set of operators similar to those found in popular streaming frameworks (e.g., Apache Flink). The figure below shows the list of operators along with a brief description of eac

Operator Parallelism

Sources, the other basic operators, and sinks have straightforward semantics for programmers who are familiar with open-source stream processing frameworks. When instantiating these components, the programmer can specify a parallelism level (i.e., the number of replicas). Each replica receives a portion of the input stream, allowing inputs to be processed in parallel and improving throughput. The Map, Filter, FlatMap, and Sink operators can be configured to work on a key-by basis, meaning that all inputs with the same key attribute are delivered to the same destination replica by the preceding operator. The Reduce operator always operates on a key-by basis.

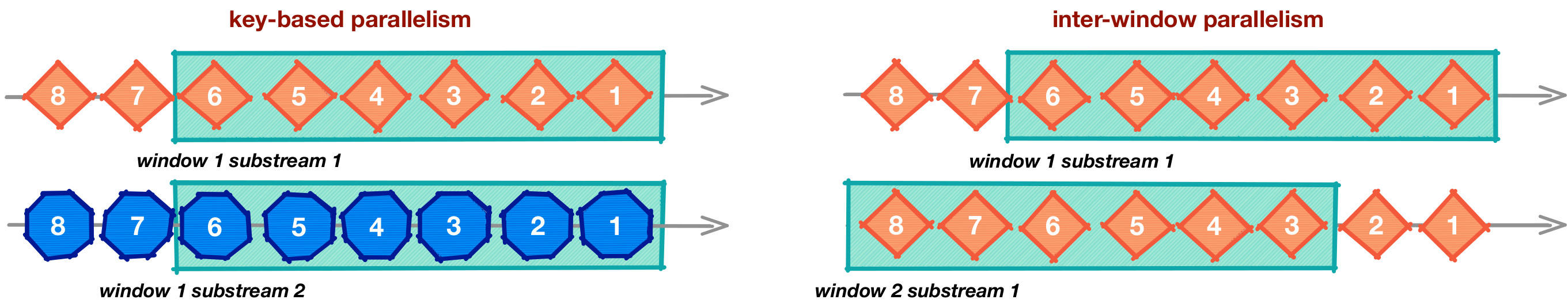

In addition, the library provides a set of window-based operators that offer useful programming abstractions [1,2] for expressing different kinds of parallelism strategies aimed at solving window-based streaming analytics tasks:

- Key-based parallelism: this is the default parallelism pattern exploited in stream processing systems that handle stateful computations. The assumption is that the stream contains input items belonging to multiple logical substreams, each composed of items that share the same value of a key attribute (a.k.a. keyed streams). The idea is to process sliding windows belonging to different substreams in parallel. The default operator enabling this behavior is Keyed_Windows (KW).

- Inter-window parallelism: in the case of high-speed streams, sliding windows may become ready for computation very frequently. The idea is to divide the set of windows to be computed among multiple parallel entities, allowing them to process distinct windows in parallel (regardless of whether they belong to different or the same keyed substream). The operators enabling this behavior are Parallel_Windows (PW) and Paned_Windows (PaW).

- Intra-window parallelism: the idea is to parallelize the computation within each window by splitting its content into partitions that are processed concurrently. The results of these partitions are then combined to produce the final window-level result. The operators enabling this behavior are Paned_Windows (PaW) and MapReduce_Windows (MRW).

The idea behind these approaches is illustrated in the figure below. For simplicity, we present an example using count-based windows of length six items, sliding every two items. However, the library also supports time-based windows (currently with event-time semantics only), which can be instantiated with both non-incremental and incremental queries.

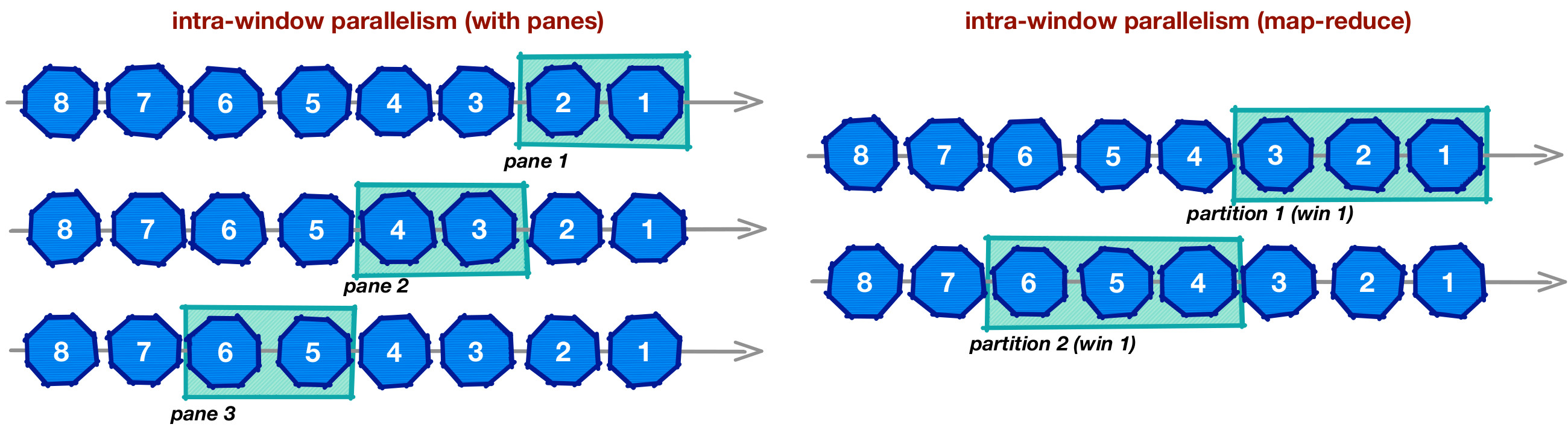

Intra-window parallelism requires additional explanation. The library supports operators that enable parallel processing within each window. The first approach is based on the paned technique [3], in which each window is divided into tumbling subwindows, called panes, whose length is equal to the greatest common divisor between the window length and its slide (in the example, each pane is two items long). The operator computes a partial result for each pane—processing them in parallel when possible—and then aggregates these pane results to produce the final window-level output (in the example, three pane results are needed per window). A notable advantage of this approach is that pane results shared across consecutive windows do not need to be recomputed, thereby improving computational performance.

The second approach to intra-window parallelism is based on the map-reduce pattern: each window is divided into partitions, and a partial result is computed for each partition. The partition results are then aggregated to produce the final window-level output. Although similar to the paned approach, in this case the size of the partitions depends on the number of parallel replicas involved in processing the operator (two in the figure below, right-hand side), making it possible to reduce processing latency proportionally to the operator’s parallelism level. However, unlike the paned approach, windows must be recomputed from scratch—albeit in parallel.

It is worth noting that existing frameworks do not provide all these parallel operators in an integrated and easy-to-use manner. For example, Apache Storm and Apache Flink offer native support for key-based parallelism, while intra-window parallelism is naturally supported in Spark Streaming as an extension of the Spark engine’s batch-processing and map-reduce capabilities.

Starting from version 2.8 of the library, WindFlow provides an additional window-based operator called FFAT_Windows. Similar to the Keyed_Windows operator, it processes windows from different keyed substreams in parallel on different CPU cores. However, windows belonging to the same substream are processed using the Flat Fixed-size Aggregator (Flat-FAT) approach, which is based on balanced binary trees. This method avoids recomputing windows from scratch. Further details can be found in the paper that first introduced this approach [4].

GPU Operators

WindFlow version 3.x supports operators designed for NVIDIA GPUs. The idea is to accelerate the execution of computationally intensive operators by leveraging GPUs together with small batching techniques. A GPU operator receives batches of inputs from the preceding operators in the dataflow graph and processes each batch in parallel on the GPU.

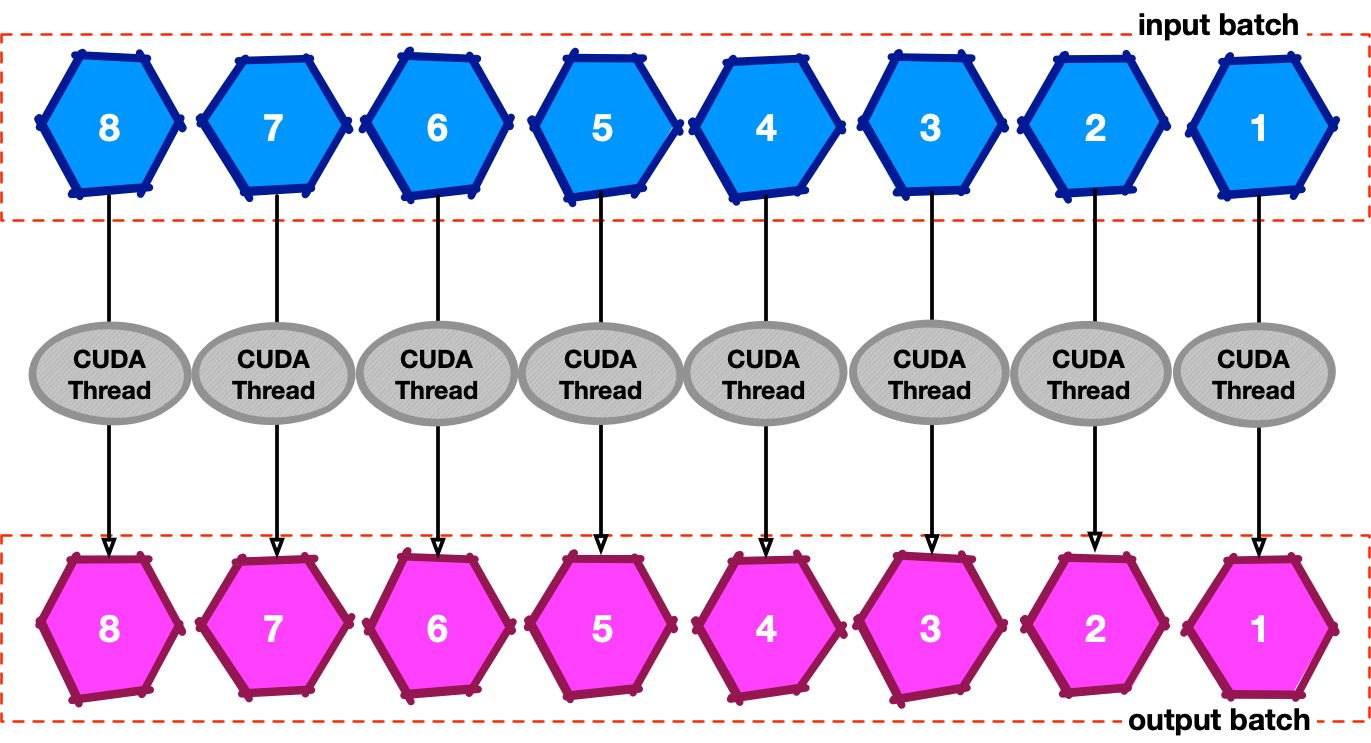

For stateless operators—that is, those created without a key-by modifier—all inputs within a batch are processed in parallel on the GPU, with each input handled by a separate CUDA thread. This idea is illustrated in the figure below.

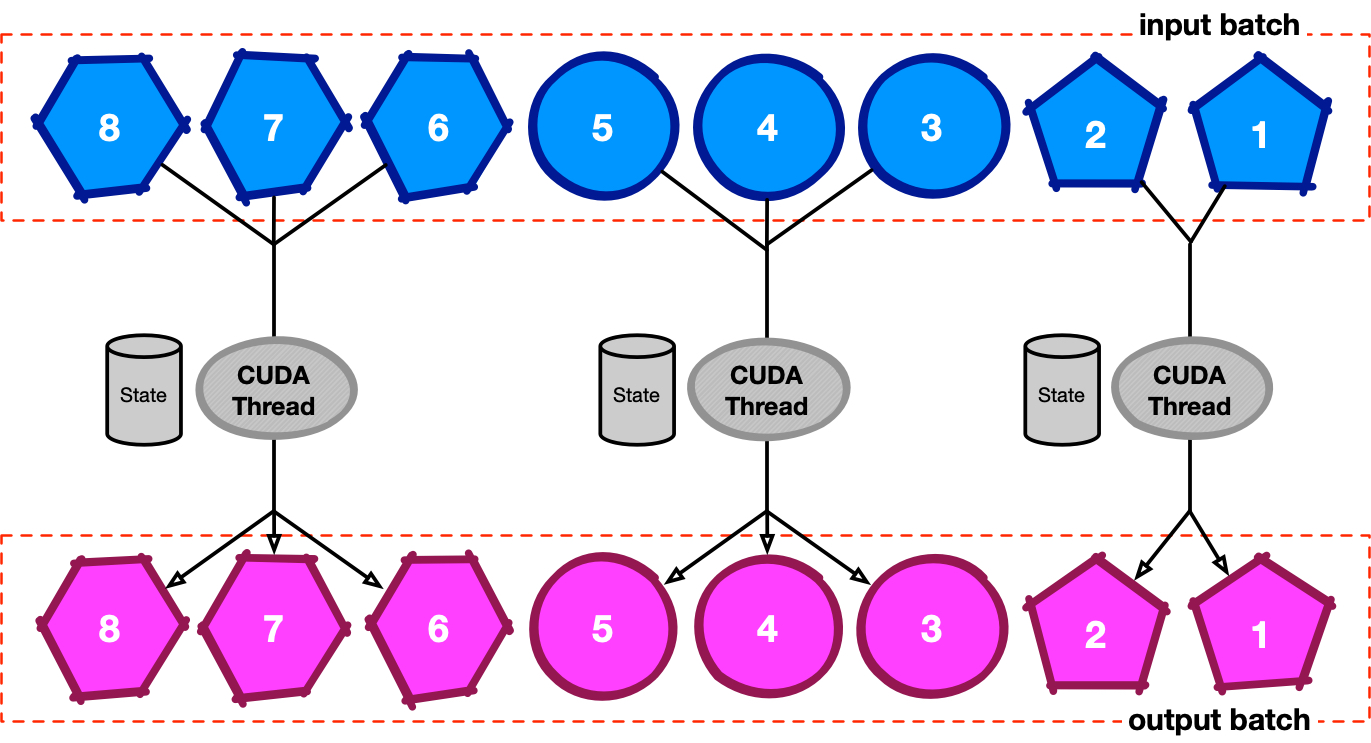

Stateful operators require a key-by distribution and maintain a state partition for each key. The general requirement is that all inputs belonging to the same substream must be processed sequentially, since they read and modify the associated state. WindFlow provides an effective approach to achieve this on GPUs by keeping a device-side object per key to represent the state partition, and by enforcing stateful constraints directly within the design of its internal CUDA kernels.

Starting from release 3.0.0, WindFlow supports both Map and Filter operators on GPUs. Additionally, a GPU variant of FFAT_Windows (called FFAT_Windows_GPU) is provided, which uses a modified version of the FlatFAT structure designed for efficient computation on GPUs.

References

Useful references:

- G. Mencagli, M. Torquati, D. Griebler, M. Danelutto and L. G. L. Fernandes. Raising the Parallel Abstraction Level for Streaming Analytics Applications. IEEE Access, vol. 7, pp. 131944-131961, 2019. DOI: 10.1109/ACCESS.2019.2941183

- Tiziano Matteis and Gabriele Mencagli. 2017. Parallel Patterns for Window-Based Stateful Operators on Data Streams: An Algorithmic Skeleton Approach. Int. J. Parallel Program. 45, 2 (April 2017), 382-401. DOI: https://doi.org/10.1007/s10766-016-0413-x

- Jin Li, David Maier, Kristin Tufte, Vassilis Papadimos, and Peter A. Tucker. 2005. No pane, no gain: efficient evaluation of sliding-window aggregates over data streams. SIGMOD Rec. 34, 1 (March 2005), 39-44. DOI: http://dx.doi.org/10.1145/1058150.1058158

- Kanat Tangwongsan, Martin Hirzel, Scott Schneider, and Kun-Lung Wu. 2015. General incremental sliding-window aggregation. Proc. VLDB Endow. 8, 7 (February 2015), 702–713. DOI:https://doi.org/10.14778/2752939.2752940

- T. De Matteis, G. Mencagli, D. De Sensi, M. Torquati and M. Danelutto. GASSER: An Auto-Tunable System for General Sliding-Window Streaming Operators on GPUs. IEEE Access, vol. 7, pp. 48753-48769, 2019. DOI: 10.1109/ACCESS.2019.2910312